Jinbin Bai

I received my B.S. in Computer Science from Nanjing University and my high school diploma from the Affiliated High School of Shanxi University. After that, I studied in the Department of Computer Science at the National University of Singapore and founded MeissonFlow Research (see the Organization Card for more details) to develop the masking paradigm in generative modeling. I have been working with Prof. Shuicheng Yan and Prof. Ming-Hsuan Yang.

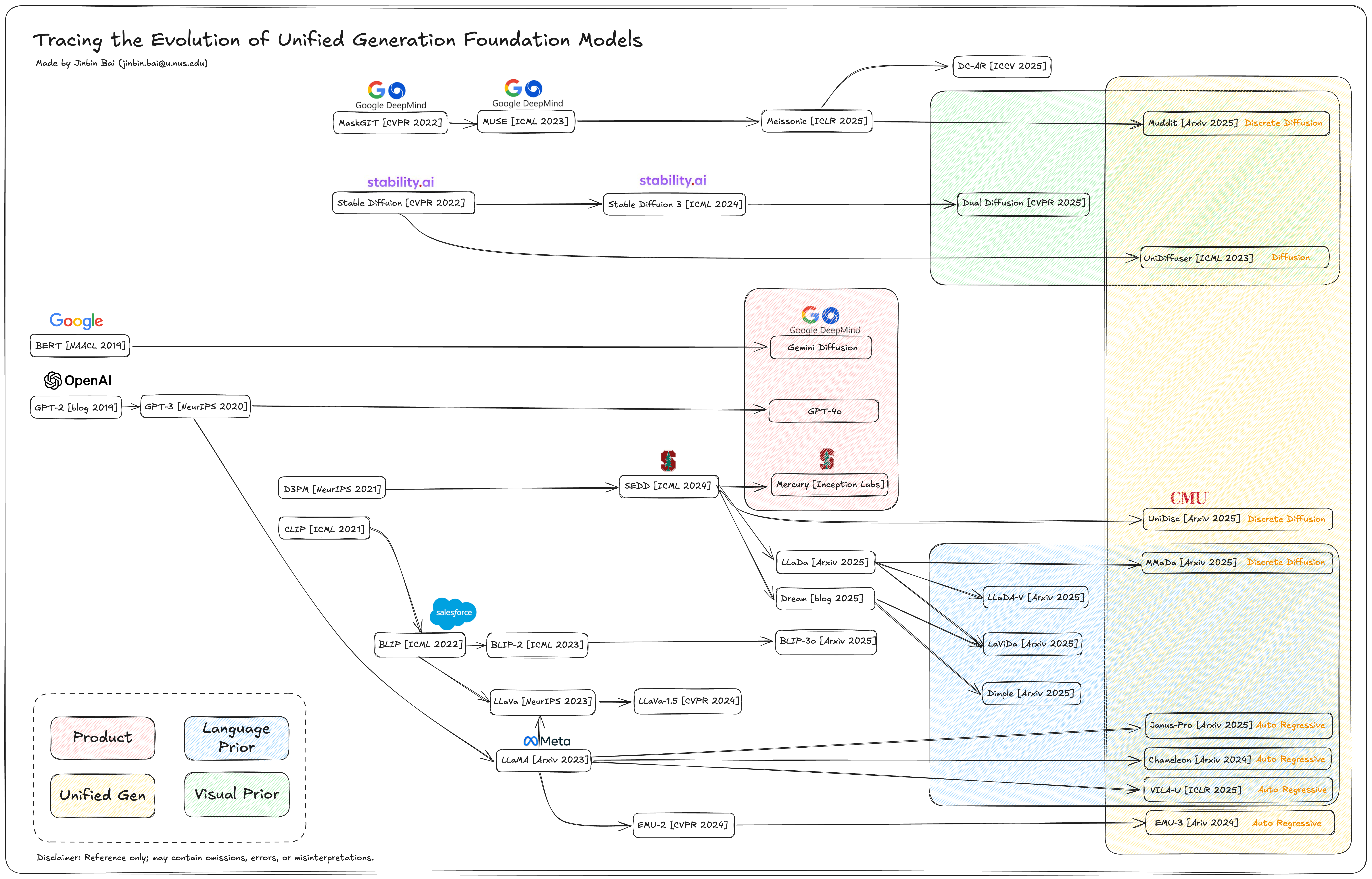

I am trying to find ways to build interactive video models and algorithms for content creation. I want to build the world with a visual prior, though I sadly agree that the language prior dominates current unified models.

Email / Google Scholar / GitHub / Hugging Face / X

Jinbin in Cambridge, UK, 2025.

News

- 2026-02 Three papers accepted by CVPR 2026.

- 2026-02 Invited Talk from Ploutos Team.

- 2026-01 One paper accepted by ICLR 2026, see you in Rio de Janeiro, Brazil!

- 2025-09 Two papers accepted by NeurIPS 2025.

- 2025-06 Two papers accepted by ICCV 2025.

- 2025-04 One paper accepted by CVPR 2025 AI for Content Creation Workshop.

- 2025-04 One paper accepted by IJCAI 2025.

- 2025-04 Invited Talk from Riot Video Games.

- 2025-03 Awarded the Frontier Top Ten Young Scholars Award (1st) by Century Frontier Asset Management.

- 2025-03 Invited Talk from University of Illinois Urbana-Champaign (UIUC).

- 2025-02 One paper accepted by CVPR 2025.

- 2025-01 One paper accepted by ICLR 2025, see you in Singapore!

- 2024-12 One paper accepted by AAAI 2025.

- 2024-11 Invited Talk from Safe SuperIntelligence (SSI) Club.

- 2024-04 One paper accepted by IJCAI 2024, see you in Jeju!

- 2023-08 One paper accepted by BMVC 2023.

- 2023-07 Two papers accepted by ACM MM 2023.

- 2023-07 Two papers accepted by ICCV 2023.

- 2023-06 Taming Diffusion Models for Music-driven Conducting Motion Generation accepted by the AAAI 2023 Summer Symposium and received the Best Paper Award.

- 2023-05 One paper accepted by ICIP 2023, see you in Kuala Lumpur!

- 2023-02 Translating natural language to planning goals with large-language models is now on arXiv.

- 2022-11 One paper accepted by ACCV 2022.

- 2022-06 LaT: Latent Translation with Cycle-Consistency for Video-Text Retrieval is now on arXiv.

- 2021-03 Awarded Outstanding Graduate by Nanjing University.

- 2019-03 Awarded Outstanding Student by Nanjing University.

Selected Publications (see Google Scholar for the full list)

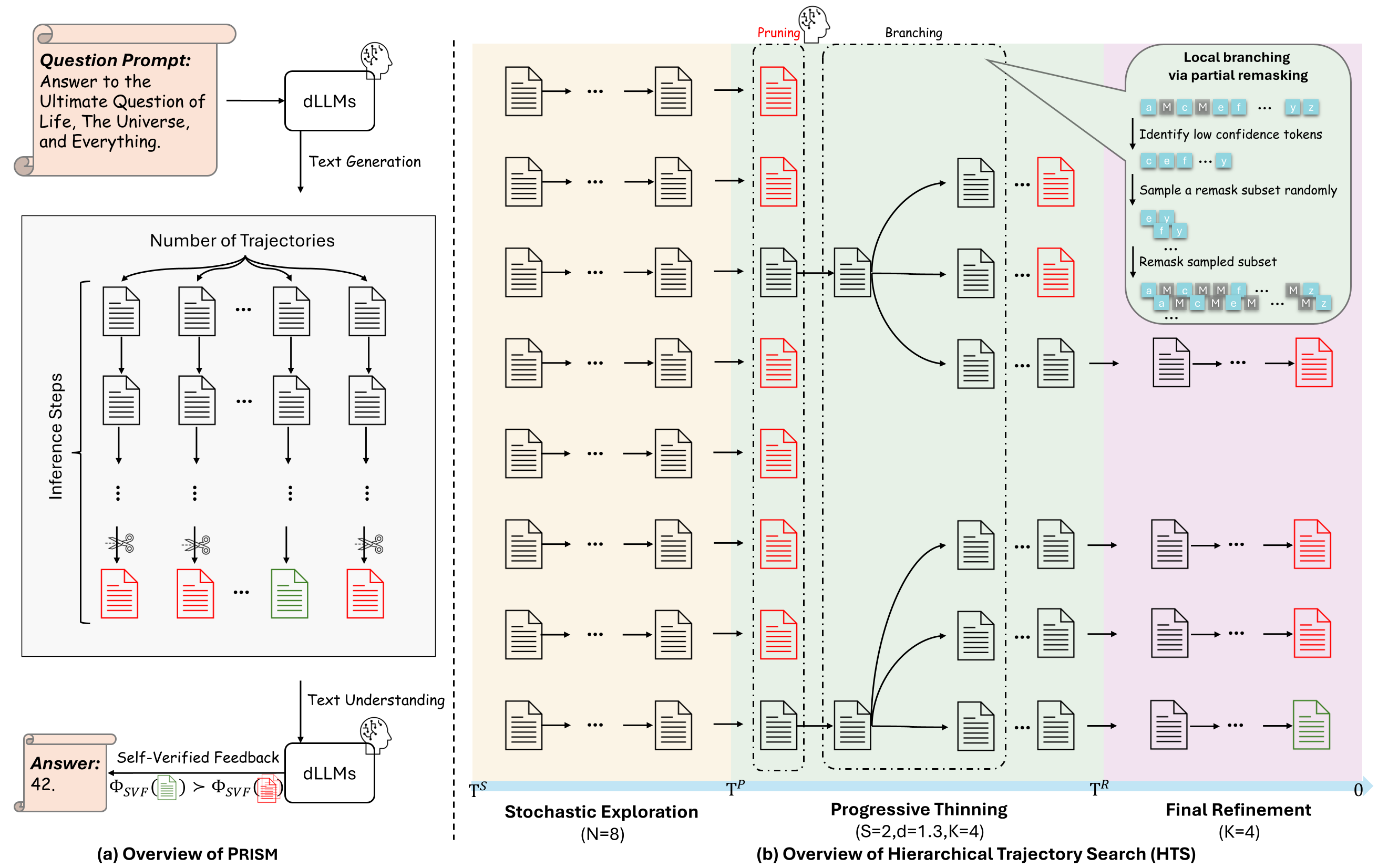

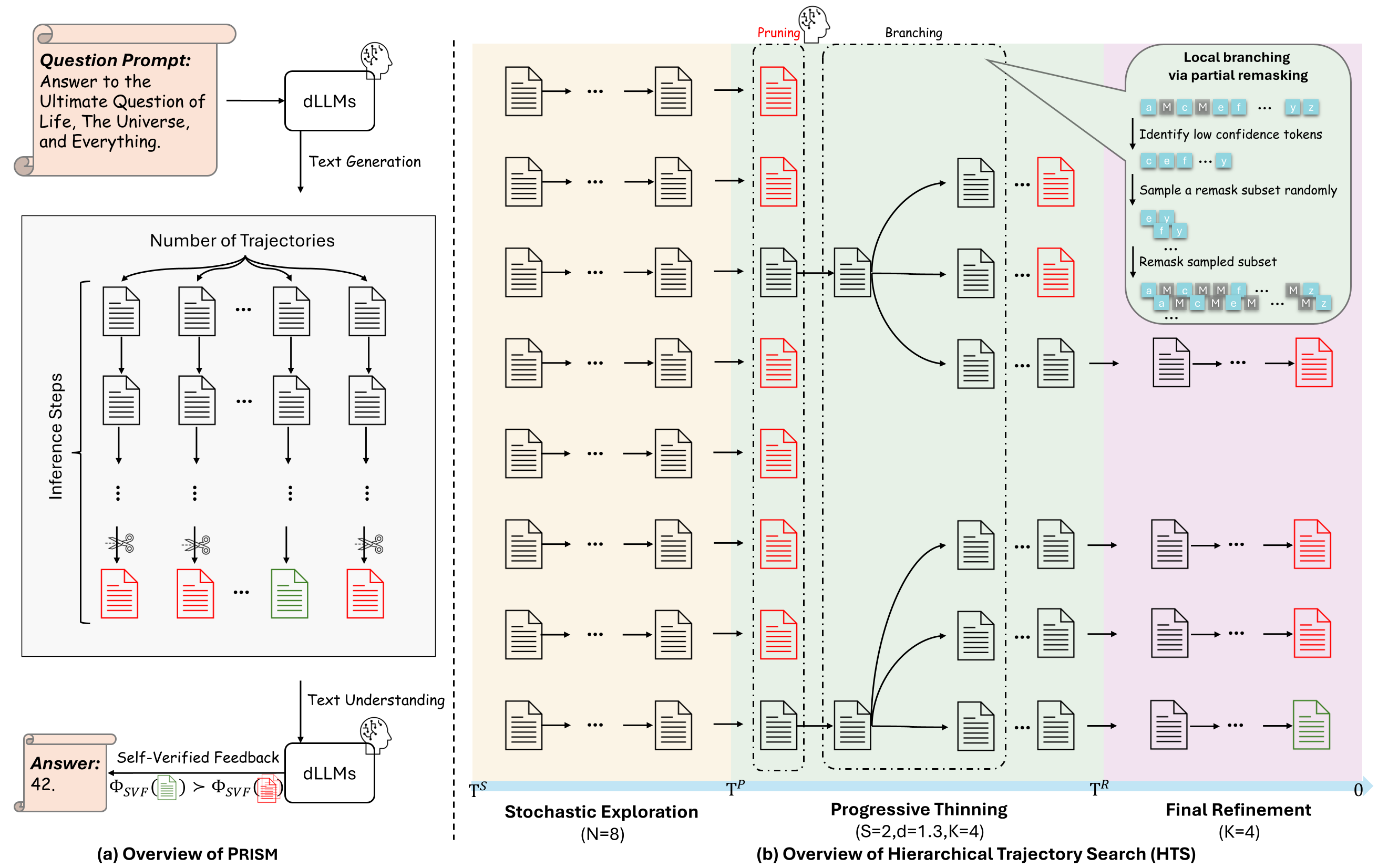

Prism: Efficient Test-Time Scaling via Hierarchical Search and Self-Verification for Discrete Diffusion Language Models

Jinbin Bai, Yixuan Li, Yuchen Zhu, Yi Xin, Qingyu Shi, Aosong Feng, Xiaohong Liu, Molei Tao, Jianru Xue, Xiangtai Li, Ming-Hsuan Yang

Technical Report 2026

[Paper] [GitHub] [Media_Report_CN]

An efficient test-time scaling method for masked diffusion models that prunes with a self-verifier and branches with remasking to unlock their full generative potential. It works well for both image generation and text generation!

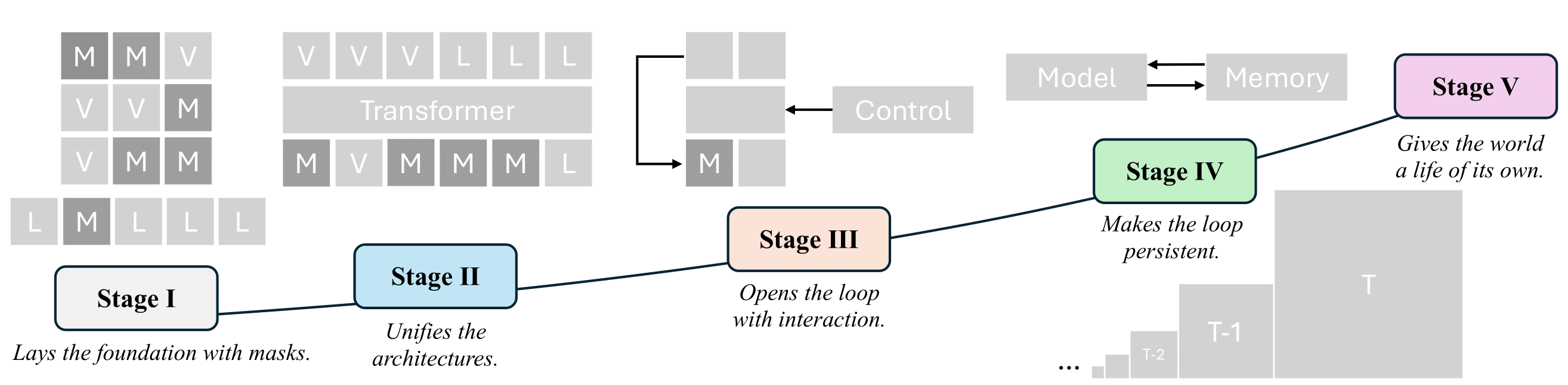

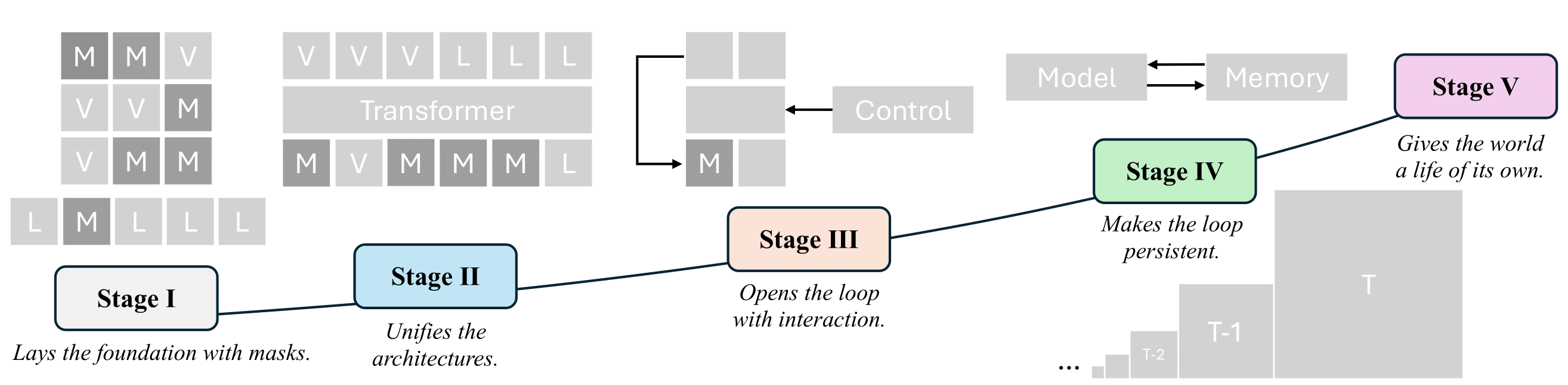

From Masks to Worlds: A Hitchhiker’s Guide to World Models

Jinbin Bai, Yu Lei, Hecong Wu, Yuchen Zhu, Shufan Li, Yi Xin, Xiangtai Li, Molei Tao, Aditya Grover, Ming-Hsuan Yang

Technical Report 2025

[Paper] [GitHub] [Media_Report_CN] [YouTube_EN] [YouTube_KO]

A Hitchhiker’s guide for those who want to build worlds. We follow one clear road: from early masked models, to unified architectures that share a single paradigm, then to interactive generative models, and finally to memory-augmented systems that sustain consistent worlds over time.

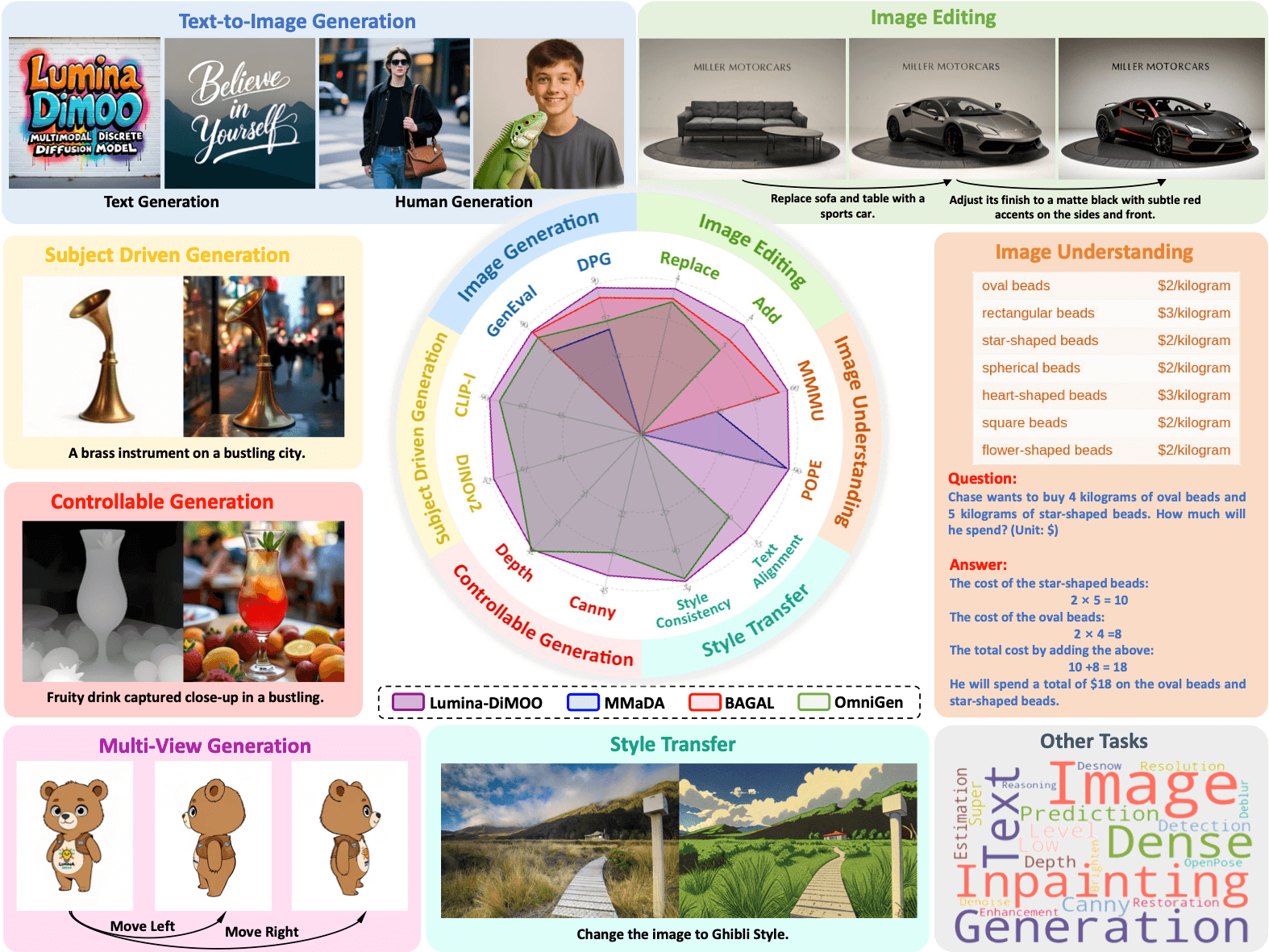

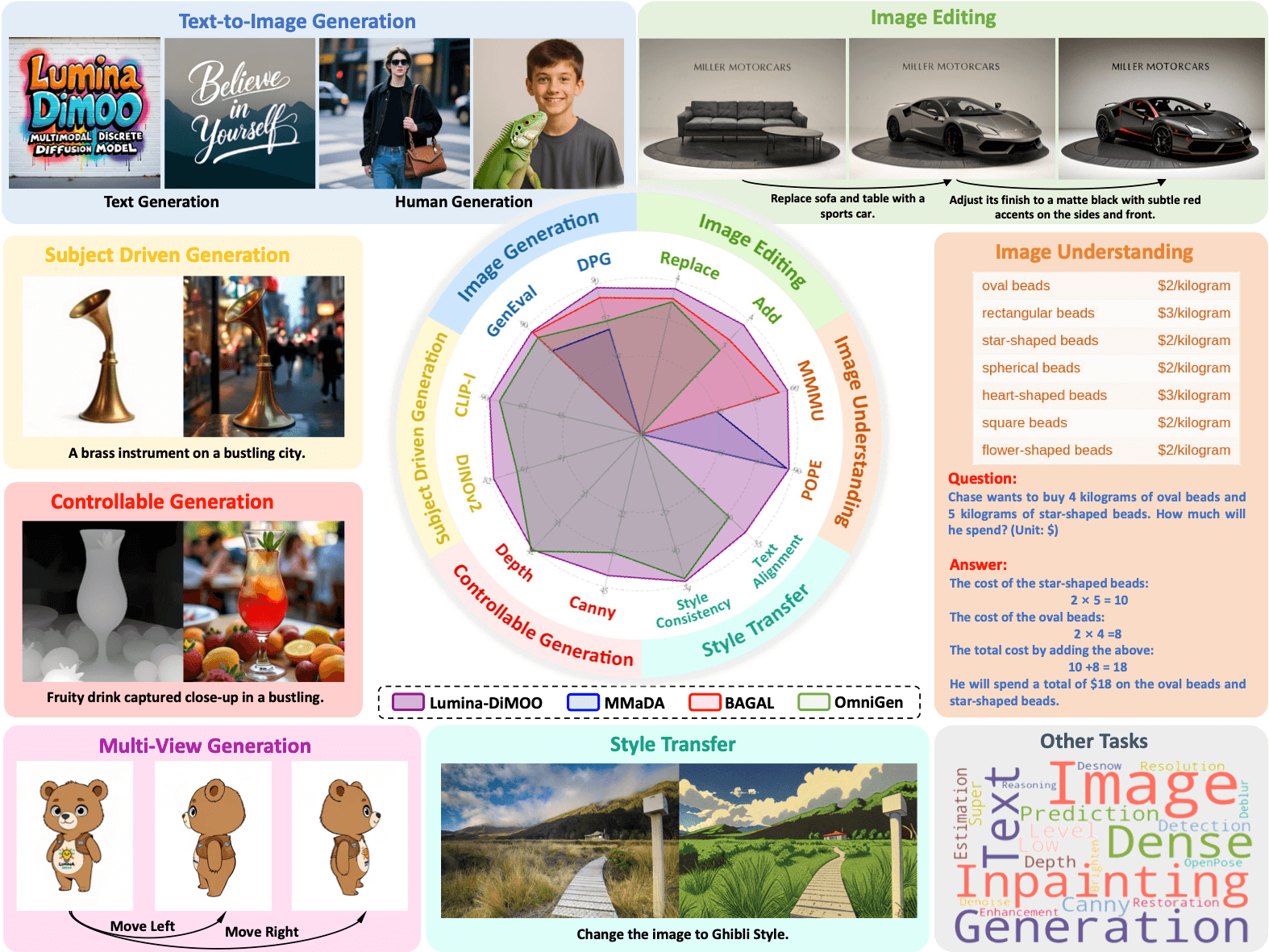

Lumina-DiMOO: An Omni Diffusion Large Language Model for Multi-Modal Generation and Understanding

Yi Xin, ..., Jinbin Bai, ... (Alpha VLLM Team)

Technical Report 2025

[Paper] [Model] [Code]

Lumina-DiMOO is a unified masked diffusion model that not only generates high-resolution images but also supports multimodal capabilities including text-to-image, image-to-image, and image understanding. It achieves SOTA performance and enables a novel application, Interactive Retouching!

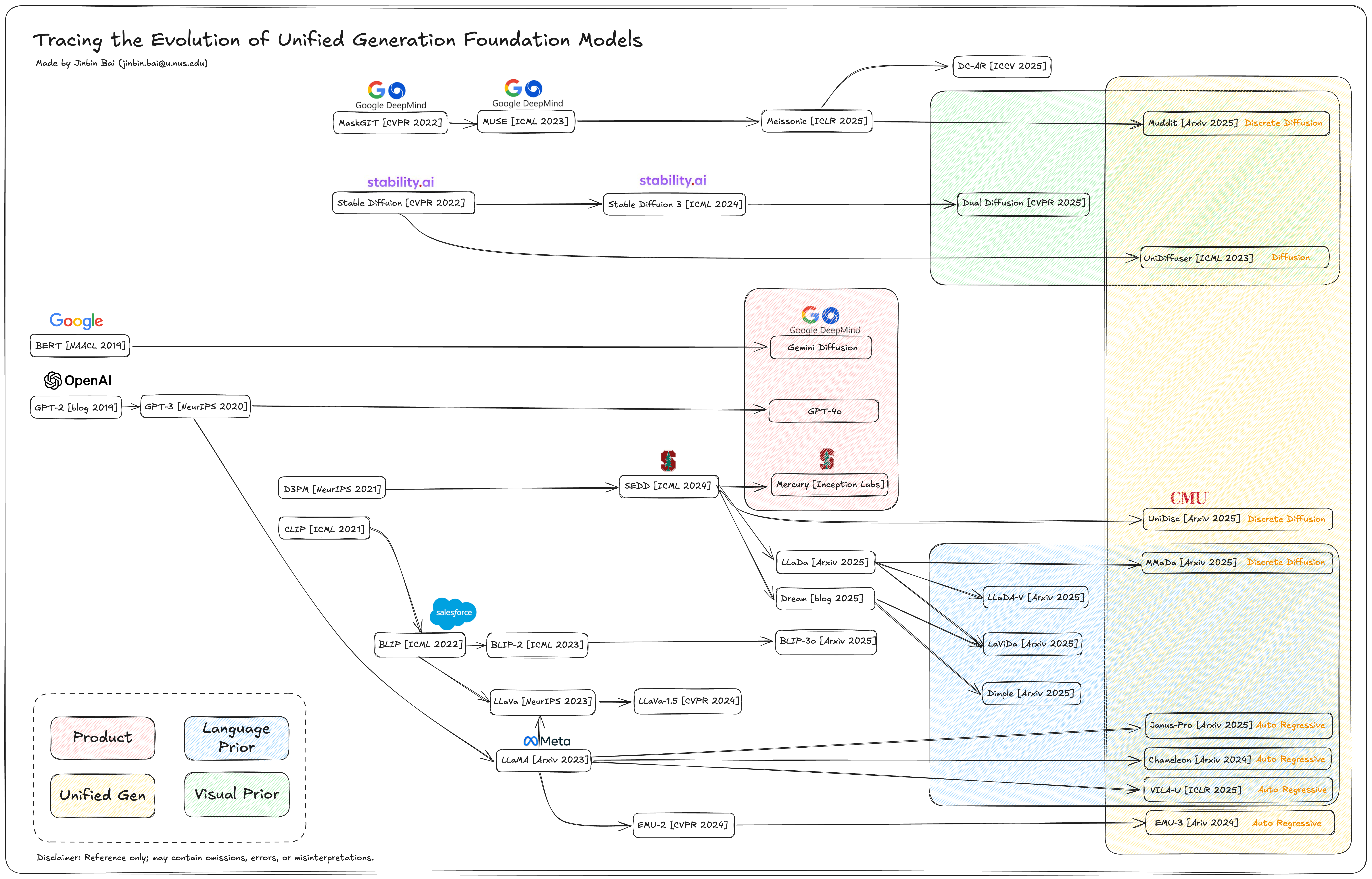

Muddit: Liberating Generation Beyond Text-to-Image with a Unified Discrete Diffusion Model

Qingyu Shi*, Jinbin Bai*, Zhuoran Zhao, ..., Shuicheng Yan (* denotes equal contribution)

ICLR 2026

[Paper] [Model] [Code]

Muddit (the official Meissonic II) is a unified masked diffusion model that not only generates high-resolution images but also supports multimodal capabilities including text-to-image, image-to-text, and VQA. We verified that a single unified model can be trained from the visual prior learned by Meissonic!

Miscellaneous

- I am a huge fan of Cities: Skylines, and I love designing and simulating cities. I can't wait for the release of Cities: Skylines II on Oct 24th, 2023! I also attended the World Cities Summit (WCS) 2024!

- My favorite movie in recent years is Free Guy, and I dream of designing a game like it.

- I enjoy traveling and have visited 16 countries. Guess where I have been?

- I like swimming, diving, surfing, and beaches under the sunshine.
